I am a Ph.D. student at MMLab@NTU, Nanyang Technological University, supervised by Prof. Ziwei Liu.

My research focuses on 3D human modeling. I am particularly interested in the perception and synthesis of human motion and human-environment interactions.

News

[2024-04] Our team (Chenyang, Jiawei and I) tied for 26th place at the 46th ICPC World Finals.

[2024-02] One paper (Digital Life Project) accepted to CVPR 2024.

[2024-01] One paper (MotionDiffuse) accepted to TPAMI.

[2023-12] I received the SDSC Dissertation Research Fellowship (awarded to 10 people nationwide).

[2023-11] Recognized as a Top Reviewer at NeurIPS 2023.

[2023-09] Two papers, FineMoGen and InsActor, accepted to NeurIPS 2023.

[2023-09] One paper (SMPLer-X) accepted to NeurIPS 2023 Datasets and Benchmarks track.

[2023-07] One paper (ReMoDiffuse) accepted to ICCV 2023.

[2023-04] One paper (BiBench) accepted to ICML 2023.

[2023-01] I gave an invited talk on MotionDiffuse and AvatarCLIP at MPI.

[2022-12] I led NTU ICPC teams to win first and second prizes at the 2022 Manila ICPC Regional Contest.

[2022-07] One paper (HuMMan) accepted to ECCV 2022 as an oral presentation.

[2022-05] One paper (AvatarCLIP) accepted to SIGGRAPH 2022 (journal track).

[2022-03] Two papers, Balanced MSE (Oral) and GE-ViTs, accepted to CVPR 2022.

[2022-01] One paper (BiBERT) accepted to ICLR 2022.

[2021-08] Started my journey at MMLab@NTU!

Selected Publications [Full list]

* indicates equal contribution, ✉ indicates corresponding / co-corresponding author

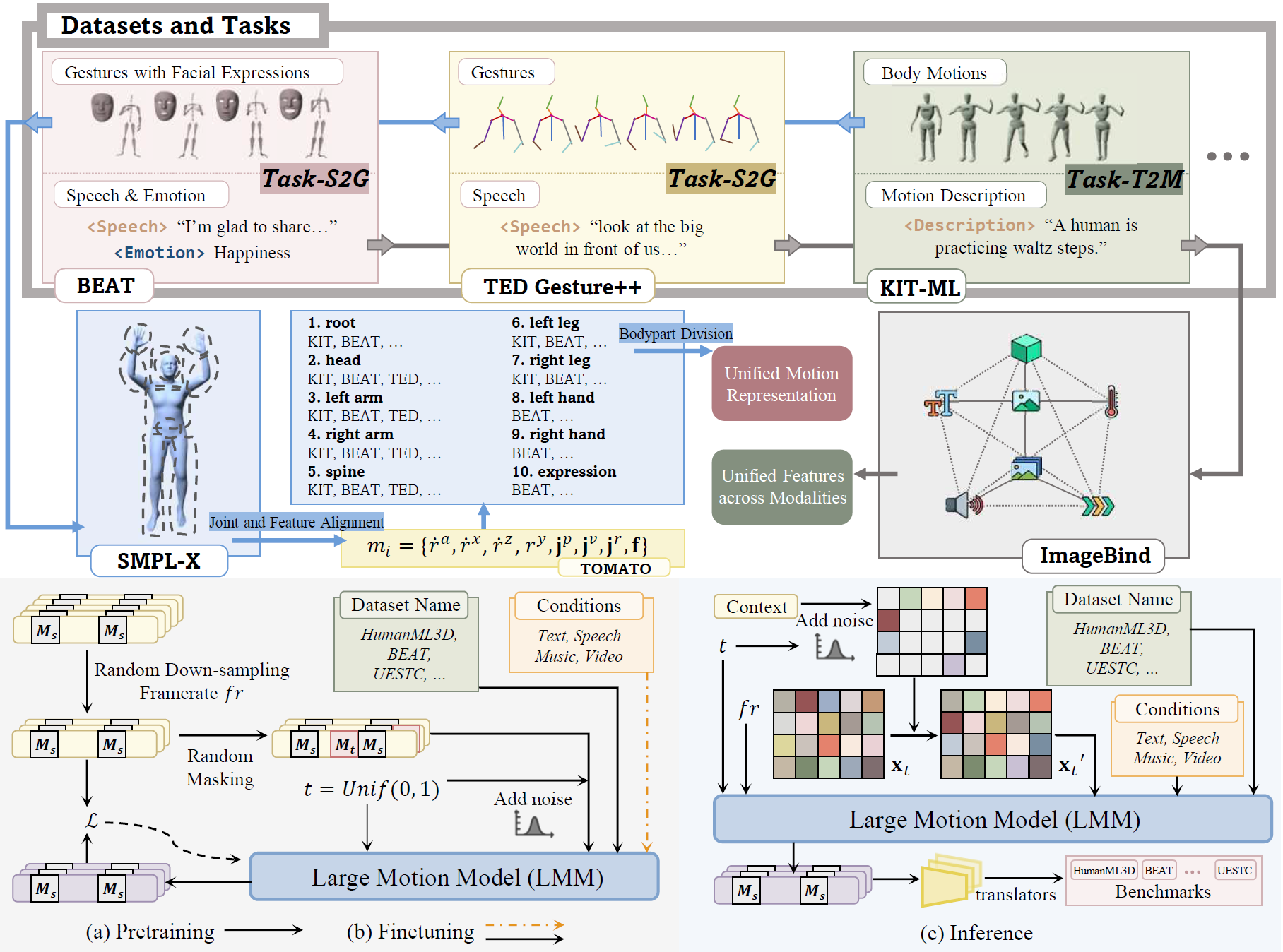

arXiv, 2024 [Paper] [Project Page] [Video] [Code] The Large Motion Model (LMM) unifies various motion generation tasks into a scalable, generalist model, demonstrating broad applicability and strong generalization across diverse tasks.

Conference on Computer Vision and Pattern Recognition (CVPR), 2024 [Paper] [Project Page] [Code] Digital Life Project is a framework that uses language as the universal medium to build autonomous 3D characters capable of engaging in social interactions and expressing themselves through articulated body motions, thereby simulating life in a digital environment.

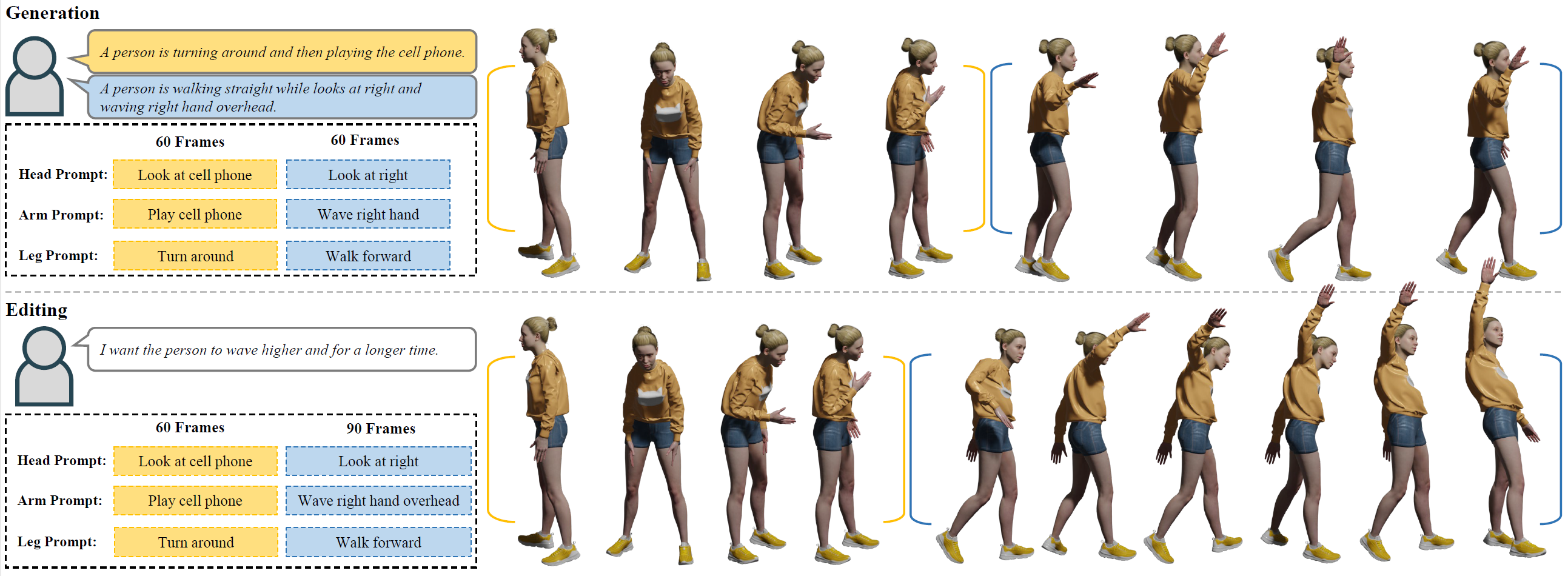

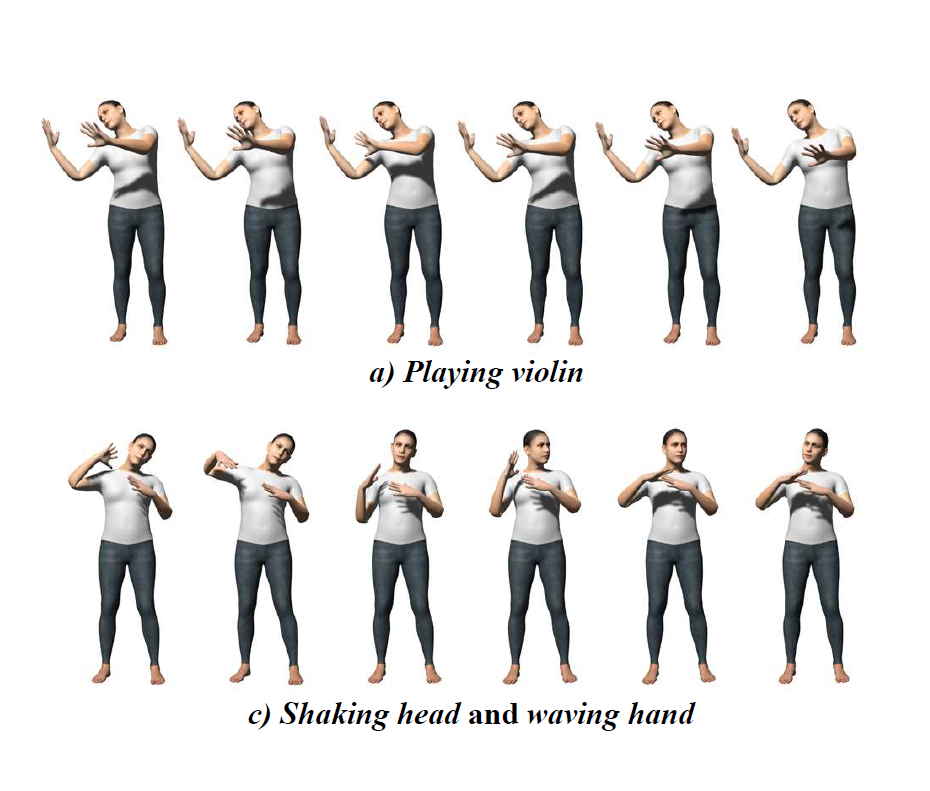

Neural Information Processing Systems (NeurIPS), 2023 [Paper] [Project Page] [Code] FineMoGen is a diffusion-based motion generation and editing framework that can synthesize fine-grained motions whose spatial-temporal composition follows the user's instructions.

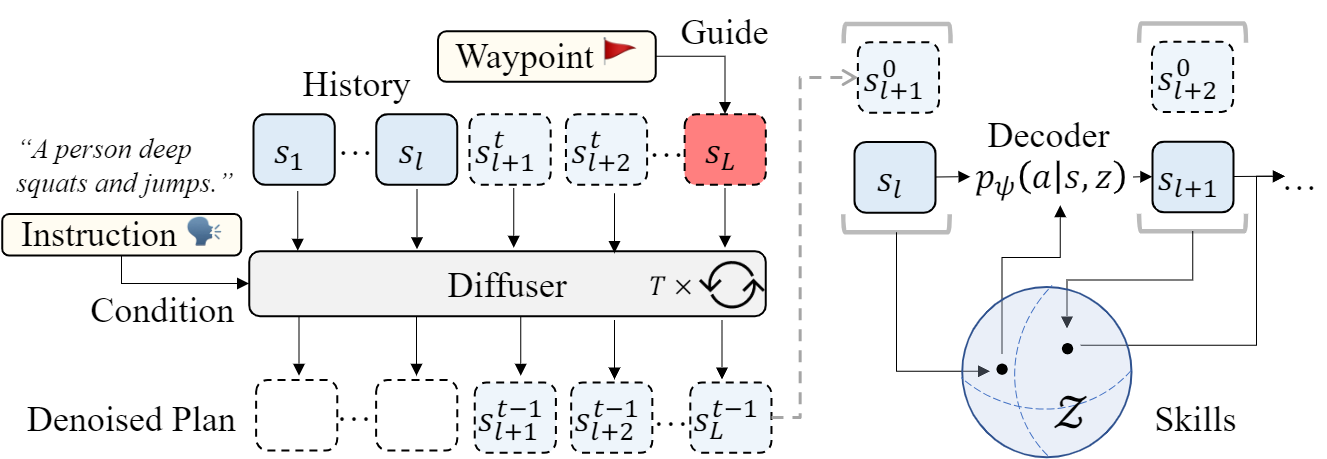

Neural Information Processing Systems (NeurIPS), 2023 [Paper] [Project Page] [Code] InsActor is a principled generative framework that leverages recent advancements in diffusion-based human motion models to produce instruction-driven animations of physics-based characters.

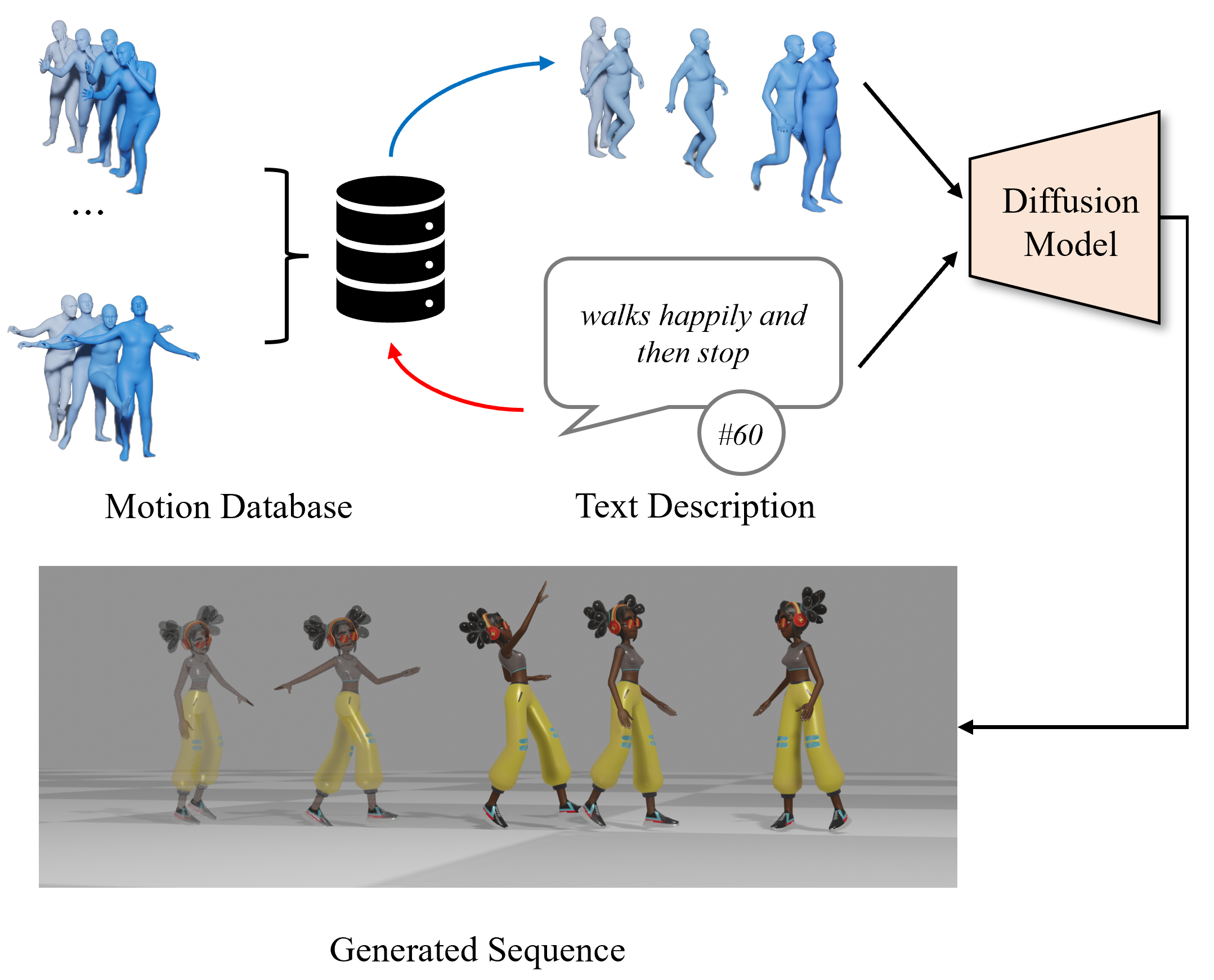

International Conference on Computer Vision (ICCV), 2023 [Paper] [Project Page] [Video] [Code] [Colab Demo] [Hugging Face Demo] ReMoDiffuse is a retrieval-augmented 3D human motion diffusion model. Benefiting from the extra knowledge provided by retrieved samples, ReMoDiffuse achieves high fidelity to the given prompts.

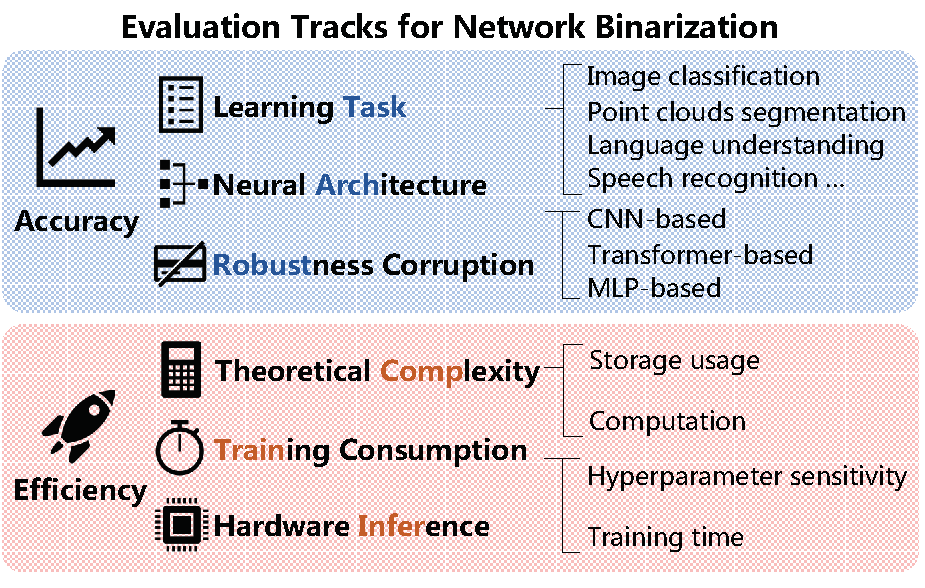

International Conference on Machine Learning (ICML), 2023 [Paper] A rigorously designed benchmark with in-depth analysis for network binarization. It first carefully scrutinizes the requirements of binarization in actual production settings and then defines evaluation tracks and metrics for a comprehensive and fair investigation.

Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2024 [Paper] [Project Page] [Video] [Code] [Colab Demo] [Hugging Face Demo] The first text-driven motion generation pipeline based on diffusion models with probabilistic mapping, realistic synthesis and multi-level manipulation ability.

ACM Transactions on Graphics (SIGGRAPH), 2022 [Paper] [Project Page] [Video] [Code] [Colab Demo] AvatarCLIP is the first zero-shot text-driven pipeline that empowers lay users to generate and animate 3D avatars from natural language descriptions.

Conference on Computer Vision and Pattern Recognition (CVPR), 2022 (Oral Presentation) [Paper] [Project Page] [Talk] [Code] [Hugging Face Demo] A statistically principled loss function that addresses the train/test mismatch in imbalanced regression and coincides with the supervised contrastive loss.
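The link to contrastive learning can be seen in a minimal sketch of a batch-based Monte-Carlo form of Balanced MSE, assuming 1-D targets and a fixed Gaussian noise variance `noise_var`. The function name `bmc_loss` and this pure-Python formulation are our own illustration, not the released code. Each prediction's Gaussian log-likelihood on its own target is normalized by its likelihood on every target in the batch, so the loss becomes a cross-entropy over the batch with the matching target as the positive.

```python
import math


def bmc_loss(preds, targets, noise_var):
    """Illustrative batch-based Balanced MSE for scalar regression (hypothetical helper).

    For prediction i, logits[j] = -(pred_i - target_j)^2 / (2 * noise_var).
    The loss is -log softmax(logits)[i], averaged over the batch:
      * -logits[i] is the ordinary squared error scaled by 1 / (2 * noise_var);
      * log_z is the extra term that uses the batch targets as a sample of the
        training label distribution, penalizing predictions drawn toward
        over-represented label values.
    """
    n = len(preds)
    total = 0.0
    for i in range(n):
        logits = [-(preds[i] - t) ** 2 / (2 * noise_var) for t in targets]
        # Numerically stable log-sum-exp over all targets in the batch.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_z - logits[i]  # cross-entropy with target i as the positive
    return total / n


if __name__ == "__main__":
    targets = [0.0, 1.0, 2.0]
    print(bmc_loss([0.0, 1.0, 2.0], targets, 0.5))  # well-matched predictions
    print(bmc_loss([2.0, 1.0, 0.0], targets, 0.5))  # mismatched predictions: larger loss
```

With a single-sample batch the normalization term cancels the squared error exactly, which is why the balancing effect only appears when the batch contains several distinct labels.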

Conference on Computer Vision and Pattern Recognition (CVPR), 2022 [Paper] [Code] A systematic comparison of the generalization ability of CNNs and ViTs. Three representative generalization-enhancement techniques are applied to ViTs to further explore their inner properties.

Zhongang Cai*, Mingyuan Zhang*, Jiawei Ren*, Chen Wei, Daxuan Ren, Zhengyu Lin, Haiyu Zhao, Lei Yang, Chen Change Loy, Ziwei Liu✉

arXiv, 2021 [Paper] [Code] A large-scale synthetic human dataset collected using the GTA-5 game engine, providing a consistent performance boost to both frame-based and video-based human mesh recovery (HMR).

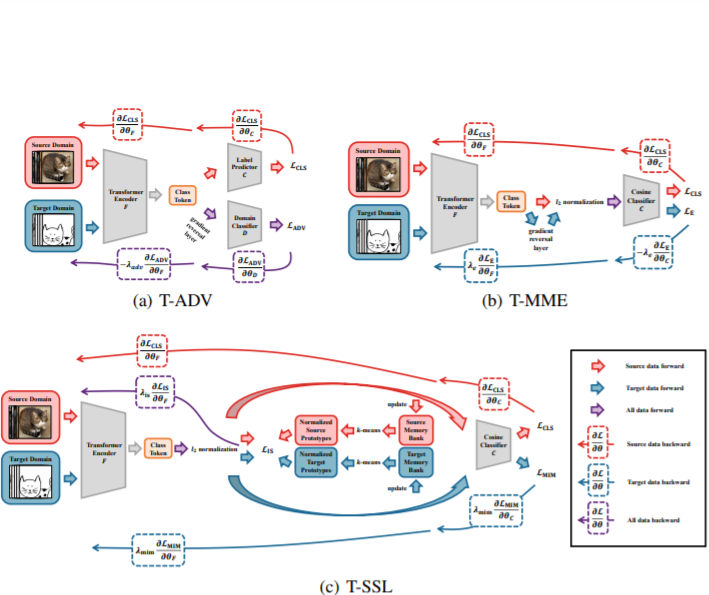

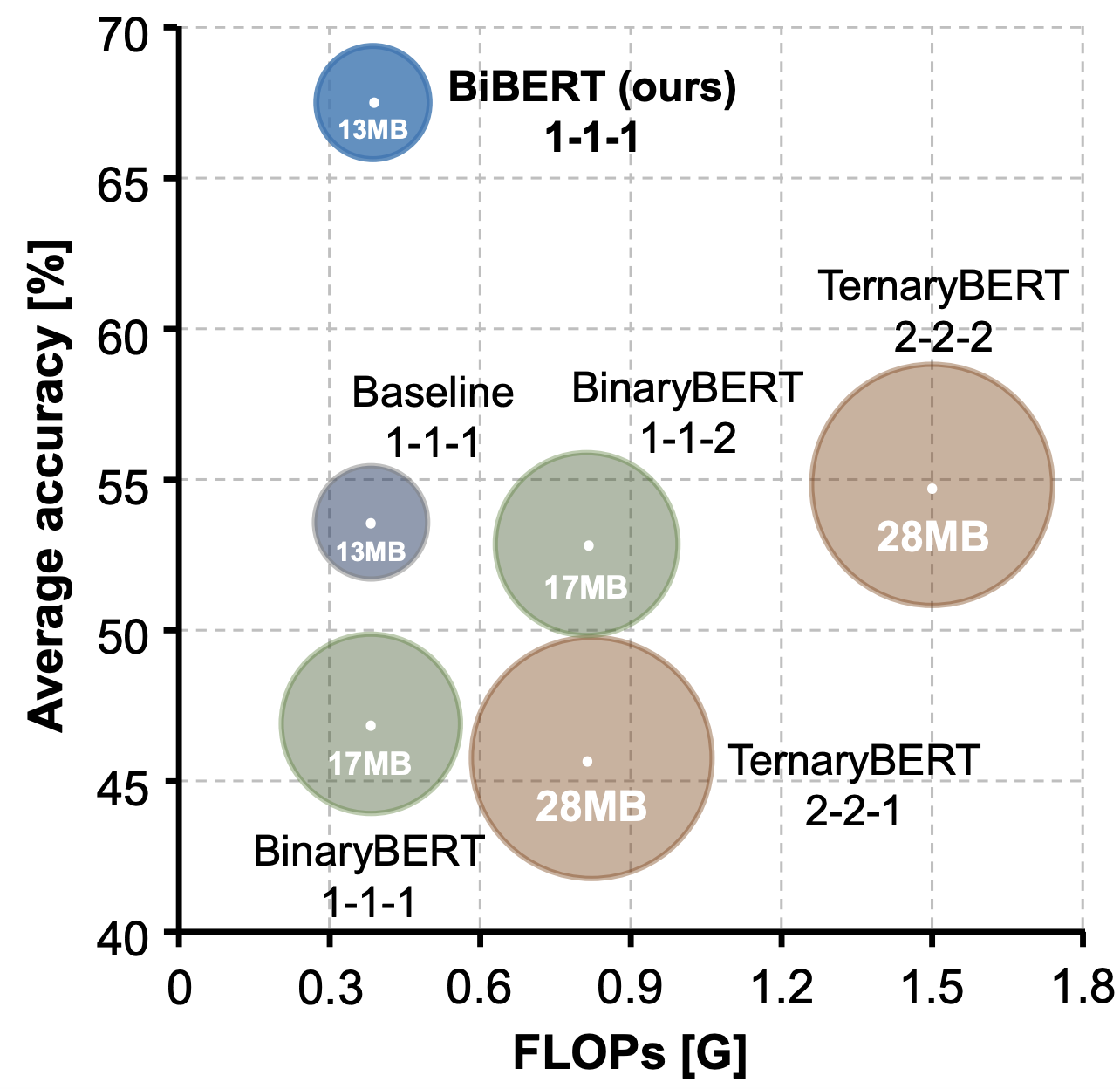

International Conference on Learning Representations (ICLR), 2022 [Paper] [Code] BiBERT is the first fully binarized BERT. It introduces an efficient Bi-Attention structure and a Direction-Matching Distillation (DMD) scheme, which together yield impressive 59.2x and 31.2x savings in FLOPs and model size, respectively.

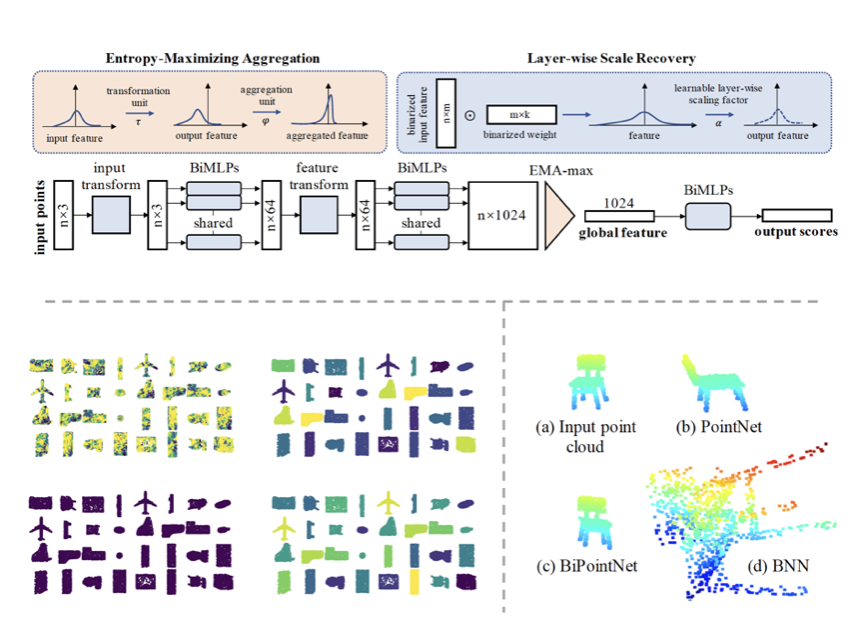

International Conference on Learning Representations (ICLR), 2021 [Paper] [Code] BiPointNet is the first fully binarized network for point cloud learning. It achieves an impressive 14.7x speedup and 18.9x storage savings on real-world resource-constrained devices.

Winter Conference on Applications of Computer Vision (WACV), 2021 [Paper] [Code] Efficient Attention reduces the memory and computational complexities of the attention mechanism from quadratic to linear. It significantly improves performance-cost trade-offs on a variety of tasks, including object detection, instance segmentation, stereo depth estimation, and temporal action localization.
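The drop from quadratic to linear cost comes from associativity: once queries and keys are normalized separately, the product can be evaluated as Q'(K'ᵀV) instead of (Q'K'ᵀ)V, so the n x n attention map is never formed. Below is a minimal pure-Python sketch under the softmax normalization we understand the paper to use (softmax over each query's features, softmax over positions for each key channel). All helper names are hypothetical, and the lists-of-rows matrices are for illustration only; a real implementation would use a tensor library.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    """(n x m) @ (m x p) -> (n x p), matrices stored as lists of rows."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def normalize(q, k):
    # rho_q: softmax along each query row (over the d_k feature channels).
    q_norm = [softmax(row) for row in q]
    # rho_k: softmax along each key column (over the n positions).
    k_norm = transpose([softmax(col) for col in transpose(k)])
    return q_norm, k_norm


def efficient_attention(q, k, v):
    """q, k: n x d_k; v: n x d_v. Cost is linear in the sequence length n."""
    q_norm, k_norm = normalize(q, k)
    context = matmul(transpose(k_norm), v)  # d_k x d_v global context
    return matmul(q_norm, context)          # n x d_v output


def attention_quadratic_order(q, k, v):
    """Same result, but materializes the n x n map first (for comparison)."""
    q_norm, k_norm = normalize(q, k)
    attn = matmul(q_norm, transpose(k_norm))  # n x n
    return matmul(attn, v)
```

Because each normalized query row and each normalized key column sums to one, the implicit attention map is row-stochastic: with `v = [[1.0]] * n`, every output entry of `efficient_attention` is 1 up to floating-point error, just as with ordinary softmax attention.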

Updated: 2024-5-8